What is Kubernetes?

• Kubernetes is a portable, extensible open-source platform for managing containerized workloads and services that facilitates both declarative configuration and automation.

• It has a large, rapidly growing ecosystem. Kubernetes services, support, and tools are widely available.

• Google open-sourced the Kubernetes project in 2014. Kubernetes builds upon a decade and a half of experience that Google has with running production workloads at scale, combined with best-of-breed ideas and practices from the community.

• The name Kubernetes originates from Greek, meaning helmsman or pilot, and is the root of governor and cybernetic.

Why Kubernetes (k8s)?

With modern web services, users expect applications to be available 24/7, and developers expect to deploy new versions of those applications several times a day. Containerization helps package software to serve these goals, enabling applications to be released and updated in an easy and fast way without downtime. Kubernetes helps you make sure those containerized applications run where and when you want and helps them find the resources and tools they need to work.

Kubernetes has a number of features. It can be thought of as:

• a container platform

• a microservices platform

• a portable cloud platform and a lot more.

Kubernetes provides a container-centric management environment. It orchestrates computing, networking, and storage infrastructure on behalf of user workloads. This provides much of the simplicity of Platform as a Service (PaaS) with the flexibility of Infrastructure as a Service (IaaS) and enables portability across infrastructure providers.

Why Containers?

• Agile application creation and deployment: Increased ease and efficiency of container image creation compared to VM image use.

• Continuous development, integration, and deployment: Provides for reliable and frequent container image build and deployment with quick and easy rollbacks (due to image immutability).

• Dev and Ops separation of concerns: Create application container images at build/release time rather than deployment time, thereby decoupling applications from infrastructure.

• Observability: Not only surfaces OS-level information and metrics, but also application health and other signals.

• Environmental consistency across development, testing, and production: Runs the same on a laptop as it does in the cloud.

• Cloud and OS distribution portability: Runs on Ubuntu, RHEL, CoreOS, on-prem, Google Kubernetes Engine, and anywhere else.

• Application-centric management: Raises the level of abstraction from running an OS on virtual hardware to running an application on an OS using logical resources.

• Loosely coupled, distributed, elastic, liberated micro-services: Applications are broken into smaller, independent pieces and can be deployed and managed dynamically – not a fat monolithic stack running on one big single-purpose machine.

• Resource isolation: Predictable application performance.

• Resource utilization: High efficiency and density.

Architecture of a Kubernetes Cluster

Figure 1.1 Kubernetes Architecture


A Kubernetes cluster is made up of two types of nodes:

• The master node, which hosts the Kubernetes Control Plane that controls and manages the whole Kubernetes system

• Worker nodes that run the actual applications you deploy

Master Node

The Master is what controls the cluster and makes it function. It consists of multiple components that can run on a single master node or be split across multiple nodes and replicated to ensure high availability. These components are:

• The Kubernetes API Server, which you and the other Control Plane components communicate with.

• The Scheduler, which schedules your apps (assigns a worker node to each deployable component of your application)

• The Controller Manager, which performs cluster-level functions, such as replicating components, keeping track of worker nodes, handling node failures, and so on

• etcd, a consistent and highly available key-value store used as Kubernetes’ backing store for all cluster data

The components of the Control Plane hold and control the state of the cluster, but they don’t run your applications. This is done by the (worker) nodes.

The Worker Nodes

The worker nodes are the machines that run your containerized applications. The task of running, monitoring, and providing services to your applications is done by the following components:

• Docker, rkt, or another container runtime, which runs your containers

• The Kubelet, an agent that runs on each node in the cluster, which talks to the API server and manages containers on its node

• The Kubernetes Service Proxy (kube-proxy), which load-balances network traffic between application components

Kubernetes objects

A Kubernetes object is a single unit that exists in the cluster. Objects include deployments, replica sets, services, pods and much more. When creating an object you’re telling the Kubernetes cluster about the desired state you want it to have.

Desired state means the cluster continuously works to keep things as you specified. If a node in your cluster fails, Kubernetes detects this and compensates by starting the affected objects on the remaining nodes to restore the desired state. With that understood, let’s define the objects we’ll be working with.

Figure 1.2 Kubernetes exposes the whole data center as a single deployment platform


Deploying NGINX on a Kubernetes cluster

Install a single-master Kubernetes cluster using kubeadm, or run one locally with minikube.

Pods

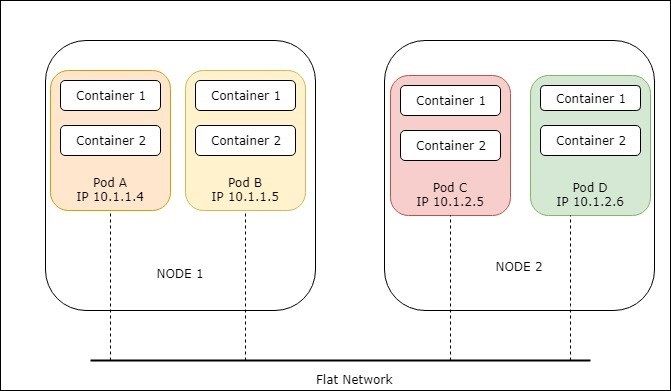

The pod is the most basic of objects that can be found in Kubernetes.

A pod is a collection of containers, with shared storage and network, and a specification on how to run them. Each pod is allocated its own IP address. Containers within a pod share this IP address and port space, and can find each other via localhost.
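To make the shared network concrete, here is a minimal sketch of a two-container pod manifest. The pod name, the app label, and the busybox sidecar are assumptions made for illustration and do not come from the walkthrough. The sidecar reaches NGINX over localhost because both containers share the pod's IP address and port space.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: nginx-with-sidecar      # hypothetical name
  labels:
    app: nginx-with-sidecar     # hypothetical label
spec:
  containers:
    - name: nginx
      image: nginx:1.7.9
      ports:
        - containerPort: 80
    - name: poller              # illustrative sidecar, not part of the walkthrough
      image: busybox
      # Polls the nginx container through the shared localhost interface.
      command: ["sh", "-c", "while true; do wget -q -O- http://localhost:80 > /dev/null; sleep 5; done"]
```

Saved to a file, a manifest like this could be created with kubectl apply -f followed by the file name.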

Figure 1.3 Pods


Create a Deployment:

The Deployment resource provides a mechanism for declaring the desired state of stateless applications and for rolling out changes to the desired state at a controlled rate.

kubectl run nginx --image=nginx:1.7.9 --replicas=3 --record

This creates a deployment called nginx. To list all available deployments:

kubectl get deployments

NAME    DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
nginx   3         3         3            3           25s

To view the pods:

kubectl get pods

NAME                     READY   STATUS    RESTARTS   AGE
nginx-6bcd55cfd6-6n5v8   1/1     Running   0          2m
nginx-6bcd55cfd6-c2m7h   1/1     Running   0          2m
nginx-6bcd55cfd6-xw6h5   1/1     Running   0          2m
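The same deployment can also be declared in a manifest, which is closer to the declarative model described above. The sketch below assumes the run: nginx label that kubectl run conventionally puts on the pods it generates; any label works as long as the selector and the pod template agree. This form is also useful on newer kubectl releases, where kubectl run creates a single pod rather than a deployment.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  replicas: 3                 # desired number of pod replicas
  selector:
    matchLabels:
      run: nginx              # assumed label; must match the template labels below
  template:
    metadata:
      labels:
        run: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.7.9
          ports:
            - containerPort: 80
```

Applying the file with kubectl apply -f should give the same three replicas as the imperative command.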

Use kubectl describe deployment nginx to view more information.

Create a Service:

The Service resource exposes a deployment either internally within the Kubernetes cluster or externally via a LoadBalancer or NodePort.

Figure 1.4 Services


Make the NGINX container accessible via the internet:

kubectl expose deployment nginx --port=80 --target-port=80 --type=NodePort

This creates a public-facing service for the NGINX deployment. Because this is a NodePort service, Kubernetes assigns it a port on each node from the NodePort range (30000-32767 by default).

Verify that the NGINX deployment is successful by entering the node IP and the assigned port in a browser.

With a command-line client such as curl, the output will show the unrendered “Welcome to nginx!” page HTML.
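For reference, a Service manifest roughly equivalent to the kubectl expose command might look like the sketch below. It assumes the pods carry the run: nginx label that kubectl run conventionally applies; if your pods are labeled differently, the selector has to change to match.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  type: NodePort
  selector:
    run: nginx          # assumed pod label; must match the deployment's pods
  ports:
    - port: 80          # port the service listens on inside the cluster
      targetPort: 80    # container port the traffic is forwarded to
      # nodePort is omitted, so Kubernetes picks one from the NodePort range
```

Running kubectl get service nginx afterwards shows which node port was assigned.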

ReplicaSet:

A ReplicaSet ensures that a specified number of pod replicas are running at any given time. To view the ReplicaSet created by the deployment:

kubectl get replicasets

NAME               DESIRED   CURRENT   READY   AGE
nginx-6bcd55cfd6   3         3         3       5m

Rolling Updates on deployments:

Suppose that you now want to update the nginx Pods to use the nginx:1.9.1 image instead of the nginx:1.7.9 image:

kubectl set image deployment/nginx nginx=nginx:1.9.1

deployment.apps "nginx" image updated
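How fast a rolling update replaces pods is governed by the deployment's update strategy. The fragment below is a sketch of the relevant part of a Deployment spec; the values 1 and 1 are assumptions chosen for illustration (if the strategy is left out, Kubernetes defaults both to 25%).

```yaml
spec:
  replicas: 3
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1         # at most one extra pod above the desired count during the update
      maxUnavailable: 1   # at most one pod may be unavailable during the update
```

With these values and three replicas, the update would keep at least two pods serving traffic while old pods are replaced.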

Checking Rollout History of a Deployment:

kubectl rollout history deployment/nginx

deployments "nginx"

REVISION   CHANGE-CAUSE
1          kubectl run nginx --image=nginx:1.7.9 --replicas=3 --record=true
2          kubectl set image deployment/nginx nginx=nginx:1.9.1

Rolling Back to a Previous Revision:

kubectl rollout undo deployment/nginx

or, to roll back to a specific revision:

kubectl rollout undo deployment/nginx --to-revision=1

Autoscaling:

The Horizontal Pod Autoscaler automatically scales the number of pods in a replication controller, deployment or replica set based on observed CPU utilization.

kubectl autoscale deployment nginx --min=10 --max=15 --cpu-percent=80

To view the autoscaler:

kubectl get hpa

NAME    REFERENCE          TARGETS         MINPODS   MAXPODS   REPLICAS   AGE
nginx   Deployment/nginx   <unknown>/80%   10        15        10         24s
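The autoscaler can also be written as a manifest. The sketch below uses the autoscaling/v1 API and mirrors the flags of the kubectl autoscale command. Note that CPU-based scaling needs a metrics source in the cluster and CPU requests on the containers; a target shown as <unknown> usually suggests one of these is missing.

```yaml
apiVersion: autoscaling/v1
kind: HorizontalPodAutoscaler
metadata:
  name: nginx
spec:
  scaleTargetRef:           # the object whose replica count is adjusted
    apiVersion: apps/v1
    kind: Deployment
    name: nginx
  minReplicas: 10
  maxReplicas: 15
  targetCPUUtilizationPercentage: 80
```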

Liveness probes:

Kubernetes can check if a container is still alive through liveness probes. You can specify a liveness probe for each container in the pod’s specification. Kubernetes will periodically execute the probe and restart the container if the probe fails.

livenessProbe:
  httpGet:
    path: /
    port: 80
  initialDelaySeconds: 3
  periodSeconds: 3

Readiness probes:

The readiness probe is invoked periodically and determines whether the specific pod should receive client requests or not. When a container’s readiness probe returns success, it’s signaling that the container is ready to accept requests.

readinessProbe:
  httpGet:
    path: /
    port: 80
  initialDelaySeconds: 3
  periodSeconds: 3
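Both probes are set per container, inside the pod specification. As a sketch of where they sit, the fragment below shows the pod spec portion of a manifest (for a Deployment this would be under spec.template.spec). The container name and image follow the walkthrough; the probe values are the ones shown above.

```yaml
spec:
  containers:
    - name: nginx
      image: nginx:1.7.9
      ports:
        - containerPort: 80
      livenessProbe:            # container is restarted if this fails
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 3
        periodSeconds: 3
      readinessProbe:           # pod receives service traffic only while this succeeds
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 3
        periodSeconds: 3
```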

Conclusion:

The primary goal of Kubernetes is to make it easy for you to build and deploy reliable distributed systems. This means not just instantiating the application once but managing the regularly scheduled rollout of new versions of that software service. Deployments are a critical piece of reliable rollouts and rollout management for your services.

Author:

Sivagandhan Jeyakkodi (B.E., MSc, CKAD, AWS-CSAA, AWS-CSOA, AWS-CDA, RHCSA, CCNA) works in the DevOps team at CMS. His passion is in cloud technologies and Kubernetes.

Sources:

Kubernetes in Action, Marko Luksa.

Kubernetes: Up and Running, Brendan Burns, Kelsey Hightower & Joe Beda.